Publications

2025

Euclid Quick Data Release (Q1). Active galactic nuclei identification using diffusion-based inpainting of Euclid VIS images

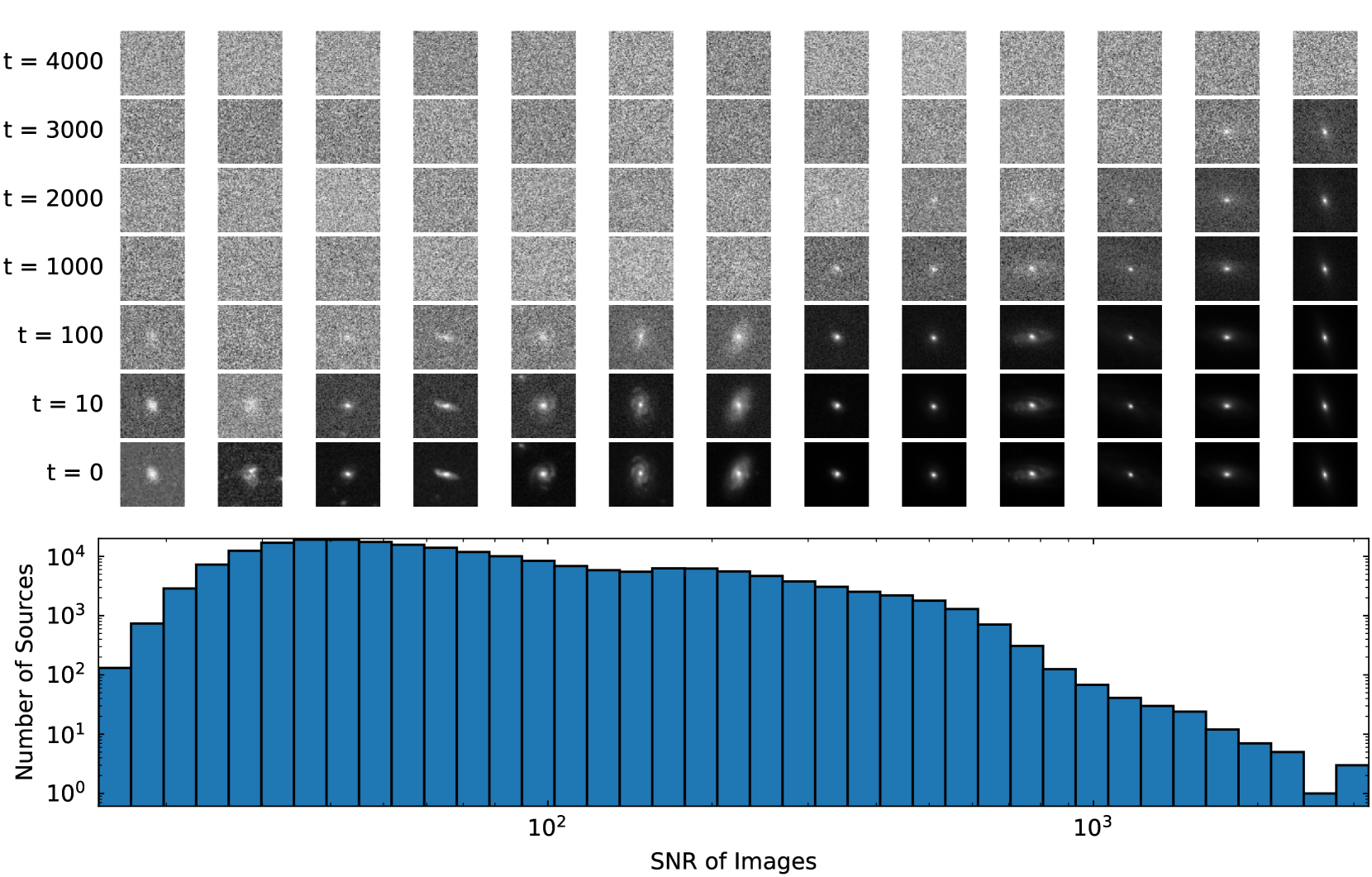

Astronomy & Astrophysics

Light emission from galaxies exhibits diverse brightness profiles, influenced by factors such as galaxy type, structural features and interactions with other galaxies. Elliptical galaxies feature more uniform light distributions, while spiral and irregular galaxies have complex, varied light profiles due to their structural heterogeneity and star-forming activity. In addition, galaxies with an active galactic nucleus (AGN) feature intense, concentrated emission from gas accretion around supermassive black holes, superimposed on regular galactic light, while quasi-stellar objects (QSO) are the extreme case of the AGN emission dominating the galaxy. The challenge of identifying AGN and QSO has been discussed many times in the literature, often requiring multi-wavelength observations. This paper introduces a novel approach to identify AGN and QSO from a single image. Diffusion models have been recently developed in the machine-learning literature to generate realistic-looking images of everyday objects. Utilising the spatial resolving power of the Euclid VIS images, we created a diffusion model trained on one million sources, without using any source pre-selection or labels. The model learns to reconstruct light distributions of normal galaxies, since the population is dominated by them. We condition the prediction of the central light distribution by masking the central few pixels of each source and reconstruct the light according to the diffusion model. We further use this prediction to identify sources that deviate from this profile by examining the reconstruction error of the few central pixels regenerated in each source's core. Our approach, solely using VIS imaging, features high completeness compared to traditional methods of AGN and QSO selection, including optical, near-infrared, mid-infrared, and X-rays.

@misc{stevens2025EuclidInpaintingAGN, author = {{Stevens}, G. and {Fotopoulou}, S. and {Bremer}, M.~N. and {Matamoro Zatarain}, T. and {Jahnke}, K. and {Margalef-Bentabol}, B. and {Huertas-Company}, M. and {Smith}, M.~J. and {Walmsley}, M. and {Salvato}, M. and {Mezcua}, M. and {Paulino-Afonso}, A. and {Siudek}, M. and {Talia}, M. and {Ricci}, F. and {Roster}, W. and the {Euclid Collaboration}.}, title = "{Euclid Quick Data Release (Q1). Active galactic nuclei identification using diffusion-based inpainting of Euclid VIS images}", journal={Astronomy \& Astrophysics}, year={2025}, publisher={EDP sciences}, DOI="10.1051/0004-6361/202554612", }
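The scoring idea in the abstract above (mask the core, regenerate it, measure how far the regenerated core is from the observed one) can be sketched in a few lines. This is only an illustration under loud assumptions: `inpaint_stand_in` is a hypothetical placeholder that fills the mask with the mean of the bordering pixels, not the diffusion model from the paper, and `toy_galaxy`, the mask radius, and the mean-squared-error metric are all choices made here for the example.

```python
import numpy as np

def central_mask(size, radius):
    """Boolean mask that is True inside a central disc of the given radius."""
    yy, xx = np.mgrid[:size, :size]
    c = (size - 1) / 2.0
    return (yy - c) ** 2 + (xx - c) ** 2 <= radius ** 2

def inpaint_stand_in(image, mask):
    """Hypothetical placeholder for a trained inpainting model: fill the
    masked pixels with the mean of the unmasked pixels bordering the mask."""
    h, w = mask.shape
    padded = np.pad(mask, 1)  # pads with False
    dilated = np.zeros_like(mask)
    # Grow the mask by one pixel in every direction (8-neighbourhood).
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            dilated |= padded[1 + dy : 1 + dy + h, 1 + dx : 1 + dx + w]
    ring = dilated & ~mask
    filled = image.copy()
    filled[mask] = image[ring].mean()
    return filled

def core_reconstruction_error(image, mask, inpaint=inpaint_stand_in):
    """Mean squared difference between observed and regenerated core pixels."""
    recon = inpaint(image, mask)
    return float(np.mean((image[mask] - recon[mask]) ** 2))

def toy_galaxy(size=33, sigma=6.0, point_flux=0.0):
    """Smooth Gaussian profile, optionally with an extra central point source."""
    yy, xx = np.mgrid[:size, :size]
    c = (size - 1) / 2.0
    img = np.exp(-((yy - c) ** 2 + (xx - c) ** 2) / (2 * sigma ** 2))
    img[int(c), int(c)] += point_flux
    return img

mask = central_mask(33, radius=2)
err_normal = core_reconstruction_error(toy_galaxy(), mask)
err_pointlike = core_reconstruction_error(toy_galaxy(point_flux=5.0), mask)
print(err_normal, err_pointlike)
```

A source whose core is brighter than its surroundings predict yields the larger error, which is the quantity one would threshold or rank to flag candidates.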

VibeSpace: Automatic Generation of Data and Vector Embeddings for Arbitrary Domains and Cross-domain Mappings using LLMs

We present VibeSpace, a novel method for the fully unsupervised construction of interpretable embedding spaces applicable to arbitrary domains. Our approach automates costly data acquisition by leveraging the knowledge embedded in large language models (LLMs), facilitating similarity assessments between entities for meaningful positioning within vector spaces, while also enabling intelligent mappings between vector space representations of disparate domains through a novel form of cross-domain similarity analysis. First, we demonstrate that our data collection methodology yields comprehensive and rich datasets across multiple domains, including songs, books, and movies. We validate the reliability of the automatically generated data via cross-checks with domain-specific catalogues. Second, we show that our method generates single-domain embedding spaces that are separable by domain-specific features, providing a robust foundation for classification tasks, recommendation systems, and other downstream applications. These spaces can be interactively queried for semantic information about different regions in embedding spaces. Lastly, by exploiting the unique capabilities of current state-of-the-art large language models, we produce cross-domain mappings that capture contextual relationships between heterogeneous entities that may not be attainable through traditional methods. This approach facilitates the creation of embedding spaces of any domain, which circumvents the need to collect and calibrate sensitive user data and provides deeper insights and better interpretations of multi-domain data.

@inproceedings{Freud2025vibespace, author = {Freud, K. and Collins, D. and Sampaio Neto, D.D. and Stevens, G.}, title = {VibeSpace: Automatic Generation of Data and Vector Embeddings for Arbitrary Domains and Cross-domain Mappings using LLMs}, year = {2025}, publisher = {Association for Computing Machinery}, doi = {10.1145/3746027.3755830}, pages = {6335-6342}, numpages = {8}, keywords = {data mining, large language model distillation, recommendation}, series = {MM `25}, }

![Summary of the strategies in MSF. In bold are our contributions. We extend the single-output Rectify strategy into its multi-output [13] variant, analogous to RecMO, DirMO [10], and DirRecMO [9]. Stratify is a framework which generalises all existing strategies and introduces novel strategies with improved performance. Lines show the evolution and fusion of previous strategies to form new ones.](https://media.springernature.com/full/springer-static/image/art%3A10.1007%2Fs10618-025-01135-1/MediaObjects/10618_2025_1135_Fig1_HTML.png)

Stratify: Unifying Multi-Step Forecasting Strategies

ECML / Data Mining and Knowledge Discovery

A key aspect of temporal domains is the ability to make predictions multiple time-steps into the future, a process known as multi-step forecasting (MSF). At the core of this process is selecting a forecasting strategy; however, with no existing frameworks to map out the space of strategies, practitioners are left with ad-hoc methods for strategy selection. In this work, we propose Stratify, a parameterised framework that addresses multi-step forecasting, unifying existing strategies and introducing novel, improved strategies. We evaluate Stratify on 18 benchmark datasets, five function classes, and short to long forecast horizons (10, 20, 40, 80) in the univariate setting. In over 84% of 1080 experiments, novel strategies in Stratify improved performance compared to all existing ones. Importantly, we find that no single strategy consistently outperforms others in all task settings, highlighting the need for practitioners to explore the Stratify space to carefully search and select forecasting strategies based on task-specific requirements. Our results are the most comprehensive benchmarking of known and novel forecasting strategies. We share our code to reproduce our results.

@article{green2025stratify, title = {Stratify: unifying multi-step forecasting strategies}, volume = {39}, issn = {1573-756X}, shorttitle = {Stratify}, doi = {10.1007/s10618-025-01135-1}, number = {5}, journal = {Data Mining and Knowledge Discovery}, author = {Green, Riku and Stevens, Grant and Abdallah, Zahraa S. and Silva Filho, Telmo M.}, month = aug, year = {2025}, pages = {64}}
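For readers unfamiliar with what a "forecasting strategy" is, the two classical extremes, recursive and direct, can be sketched as below. This is not the Stratify framework or its code: the linear least-squares model, the helper names (`make_windows`, `fit_linear`, `recursive_forecast`, `direct_forecast`) and the sine-wave series are all assumptions made for illustration.

```python
import numpy as np

def make_windows(series, n_lags, horizon):
    """Stack lag windows X and the `horizon` values that follow each window."""
    n = len(series) - n_lags - horizon + 1
    X = np.stack([series[i : i + n_lags] for i in range(n)])
    Y = np.stack([series[i + n_lags : i + n_lags + horizon] for i in range(n)])
    return X, Y

def fit_linear(X, y):
    """Least-squares linear model with an intercept; returns the coefficients."""
    A = np.column_stack([X, np.ones(len(X))])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef

def predict_linear(coef, x):
    """Apply fitted coefficients to one lag window."""
    return np.append(x, 1.0) @ coef

def recursive_forecast(series, n_lags, horizon):
    """Recursive strategy: one one-step model, fed its own predictions."""
    X, Y = make_windows(series, n_lags, 1)
    coef = fit_linear(X, Y[:, 0])
    window = list(series[-n_lags:])
    out = []
    for _ in range(horizon):
        nxt = predict_linear(coef, np.array(window[-n_lags:]))
        out.append(nxt)
        window.append(nxt)
    return np.array(out)

def direct_forecast(series, n_lags, horizon):
    """Direct strategy: a separate model for each step of the horizon."""
    X, Y = make_windows(series, n_lags, horizon)
    last = series[-n_lags:]
    return np.array(
        [predict_linear(fit_linear(X, Y[:, h]), last) for h in range(horizon)]
    )

series = np.sin(0.3 * np.arange(200))
print(recursive_forecast(series, n_lags=2, horizon=10))
print(direct_forecast(series, n_lags=2, horizon=10))
```

The recursive strategy trains one model and accumulates its own errors over the horizon; the direct strategy avoids that feedback at the cost of fitting one model per step. Hybrid strategies such as those named in the figure caption sit between these two.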

Euclid Quick Data Release (Q1). First Euclid statistical study of galaxy mergers and their connection to active galactic nuclei

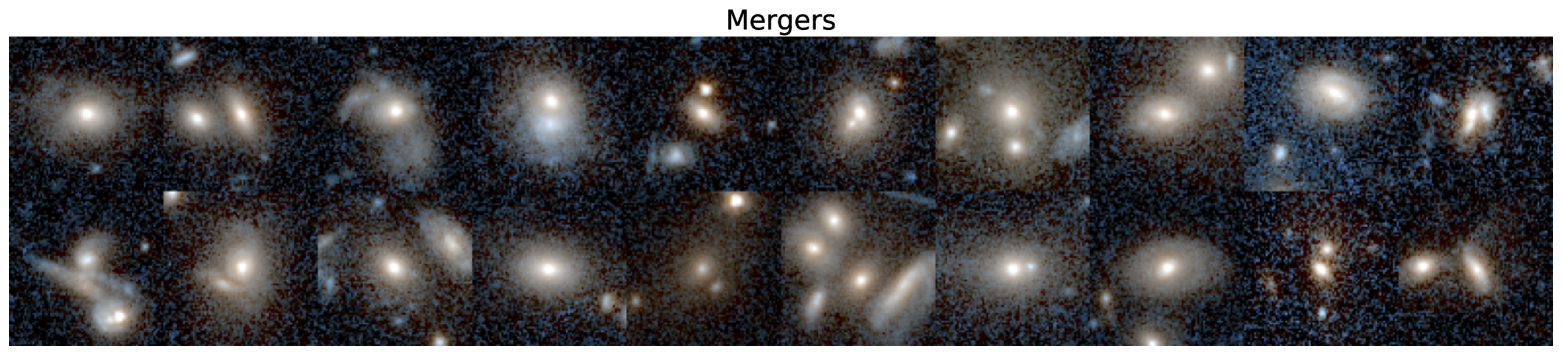

Astronomy & Astrophysics

Galaxy major mergers are indicated as one of the principal pathways to trigger active galactic nuclei (AGN). We present the first detection of major mergers in the Euclid Deep Fields and analyse their connection with AGN, showcasing the statistical power of the Euclid data. We constructed a stellar-mass-complete ($M_\star > 10^{9.8}\,M_\odot$) sample of galaxies from the first quick data release (Q1), in the redshift range $z = 0.5$-$2$. We selected AGN using X-ray detections, optical spectroscopy, mid-infrared (MIR) colours, and processing $I_\mathrm{E}$ observations with an image decomposition algorithm. We used convolutional neural networks trained on cosmological hydrodynamic simulations to classify galaxies as mergers and non-mergers. We found a larger fraction of AGN in mergers compared to the non-merger controls for all AGN selections, with AGN excess factors ranging from 2 to 6. The largest excess is seen in the MIR-selected AGN. Likewise, a generally larger merger fraction ($f_\mathrm{merg}$) is seen in active galaxies than in the non-active controls, with the excess depending on the AGN selection method. Furthermore, we analysed $f_\mathrm{merg}$ as a function of the AGN bolometric luminosity ($L_\mathrm{bol}$) and the contribution of the point-source component to the total galaxy light in the $I_\mathrm{E}$-band ($f_\mathrm{PSF}$) as a proxy for the relative AGN contribution fraction. We uncovered a rising $f_\mathrm{merg}$ with increasing $f_\mathrm{PSF}$ up to $f_\mathrm{PSF} \simeq 0.55$, after which we observed a decreasing trend. In the range $f_\mathrm{PSF} = 0.3$-$0.7$, mergers appear to be the dominant AGN fuelling mechanism. We then derived the point-source luminosity ($L_\mathrm{PSF}$) and showed that $f_\mathrm{merg}$ monotonically increases as a function of $L_\mathrm{PSF}$ at $z < 0.9$, with $f_\mathrm{merg} \geq 50\%$ for $L_\mathrm{PSF} \simeq 2 \times 10^{43}$ erg/s. Similarly, at $0.9 \leq z \leq 2$, $f_\mathrm{merg}$ rises as a function of $L_\mathrm{PSF}$, though mergers do not dominate until $L_\mathrm{PSF} \simeq 10^{45}$ erg/s. For the X-ray and spectroscopically detected AGN we derived the bolometric luminosity, $L_\mathrm{bol}$, which has a positive correlation with $f_\mathrm{merg}$ for X-ray AGN, while there is a less pronounced trend for spectroscopically-selected AGN due to the smaller sample size. At $L_\mathrm{bol} > 10^{45}$ erg/s, AGN mostly reside in mergers. We concluded that mergers are most strongly associated with the most powerful and dust-obscured AGN, typically linked to a fast-growing phase of the supermassive black hole, while other mechanisms, such as secular processes, might be the trigger of less luminous and dominant AGN.

@article{2025LaMarcaMergers, title = {Euclid Quick Data Release (Q1). First Euclid statistical study of galaxy mergers and their connection to active galactic nuclei}, author = {{La Marca}, A. and {Wang}, L. and {Margalef-Bentabol}, B. and {Gabarra}, L. and {Toba}, Y. and {Mezcua}, M. and {Rodriguez-Gomez}, V. and {Ricci}, F. and {Fotopoulou}, S. and {Matamoro Zatarain}, T. and {Allevato}, V. and {La Franca}, F. and {Shankar}, F. and {Bisigello}, L. and {Stevens}, G. and {Siudek}, M. and {Roster}, W. and {Salvato}, M. and {Tortora}, C. and {Spinoglio}, L. and {Man}, A.W.S. and {Knapen}, J.H. and {Baes}, M. and {O\'Ryan}, D. and the {Euclid Collaboration}.}, journal={Astronomy \& Astrophysics}, year={2025}, publisher={EDP sciences}, DOI="10.1051/0004-6361/202554579", }

Euclid Quick Data Release (Q1). The active galaxies of Euclid

Astronomy & Astrophysics

We present a catalogue of candidate active galactic nuclei (AGN) in the Euclid Quick Release (Q1) fields. For each Euclid source we collect multi-wavelength photometry and spectroscopy information from Galaxy Evolution Explorer (GALEX), Gaia, Dark Energy Survey (DES), Wide-field Infrared Survey Explorer (WISE), Spitzer, Dark Energy Spectroscopic Instrument (DESI), and Sloan Digital Sky Survey (SDSS), including spectroscopic redshift from public compilations. We investigate the AGN contents of the Q1 fields by applying selection criteria using Euclid colours and WISE-AllWISE cuts, finding respectively 292,222 and 65,131 candidates. We also create a high-purity QSO catalogue based on Gaia DR3 information containing 1971 candidates. Furthermore, we utilise the collected spectroscopic information from DESI to perform broad-line and narrow-line AGN selections, leading to a total of 4392 AGN candidates in the Q1 field. We investigate and refine the Q1 probabilistic random forest QSO population, selecting a total of 180,666 candidates. Additionally, we perform SED fitting on a subset of sources with available $z_\mathrm{spec}$, and by utilizing the derived AGN fraction, we identify a total of 7766 AGN candidates. We discuss purity and completeness of the selections and define two new colour selection criteria (JH_IEY and IEH_gz) to improve on purity, finding 313,714 and 267,513 candidates respectively in the Q1 data. We find a total of 229,779 AGN candidates, equivalent to an AGN surface density of 3641 deg$^{-2}$ for $18 < I_\mathrm{E} \leq 24.5$, and a subsample of 30,422 candidates corresponding to an AGN surface density of 482 deg$^{-2}$ when limiting the depth to $18 < I_\mathrm{E} \leq 22$. The surface density of AGN recovered from this work is in line with predictions based on the AGN X-ray luminosity functions.

@article{2025MatamoroZatarainActive, title = {Euclid Quick Data Release (Q1). The active galaxies of Euclid}, author = {{Matamoro Zatarain}, T. and {Fotopoulou}, S. and {Ricci}, F. and {Bolzonella}, M. and {La Franca}, F. and {Viitanen}, A. and {Zamorani}, G. and {Taylor}, M.B. and {Mezcua}, M. and {Laloux}, B. and {Bongiorno}, A. and {Jahnke}, K. and {Stevens}, G. and {Shaw}, R.~A. and {Bisigello}, L. and {Roster}, W. and {Fu}, Y. and {Margalef-Bentabol}, B. and {La Marca}, A. and {Tarsitano}, F. and {Feltre}, A. and {Calhau}, J. and {Lopez Lopez}, X. and {Scialpi}, M. and {Salvato}, M. and {Allevato}, V. and {Siudek}, M. and {Saulder}, C. and {Vergani}, D. and {Bremer}, M.~N. and {Wang}, L. and {Giulietti}, M. and {Alexander}, D.~M. and {Sluse}, D. and {Shankar}, F. and {Spinoglio}, L. and {Scott}, D. and {Shirley}, R. and {Landt}, H. and {Selwood}, M. and {Toba}, Y. and {Dayal}, P. and the {Euclid Collaboration}}, journal={Astronomy \& Astrophysics}, year={2025}, publisher={EDP sciences}, DOI= "10.1051/0004-6361/202554619", }

Euclid Quick Data Release (Q1). Exploring galaxy properties with a multi-modal foundation model

Astronomy & Astrophysics

Modern astronomical surveys, such as the Euclid mission, produce high-dimensional, multi-modal data sets that include imaging and spectroscopic information for millions of galaxies. These data serve as an ideal benchmark for large, pre-trained multi-modal models, which can leverage vast amounts of unlabelled data. In this work, we present the first exploration of Euclid data with AstroPT, an autoregressive multi-modal foundation model trained on approximately 300000 optical and infrared Euclid images and spectral energy distributions (SEDs) from the first Euclid Quick Data Release. We compare self-supervised pre-training with baseline fully supervised training across several tasks: galaxy morphology classification; redshift estimation; similarity searches; and outlier detection. Our results show that: (a) AstroPT embeddings are highly informative, correlating with morphology and effectively isolating outliers; (b) including infrared data helps to isolate stars, but degrades the identification of edge-on galaxies, which are better captured by optical images; (c) simple fine-tuning of these embeddings for photometric redshift and stellar mass estimation outperforms a fully supervised approach, even when using only 1% of the training labels; and (d) incorporating SED data into AstroPT via a straightforward multi-modal token-chaining method improves photo-z predictions, and allows us to identify potentially more interesting anomalies (such as ringed or interacting galaxies) compared to a model pre-trained solely on imaging data.

@article{Siudek2025EuclidFoundation, author = {{Siudek}, M. and {Huertas-Company}, M. and {Smith}, M. and {Martinez-Solaeche}, G. and {Lanusse}, F. and {Ho}, S. and {Angeloudi}, E. and {Cunha}, P.~A.~C. and {Domínguez Sánchez}, H. and {Dunn}, M. and {Fu}, Y. and {Iglesias-Navarro}, P. and {Junais}, J. and {Knapen}, J.~H. and {Laloux}, B. and {Mezcua}, M. and {Roster}, W. and {Stevens}, G. and {Vega-Ferrero}, J. and the {Euclid Collaboration}.}, title = "{Euclid Quick Data Release (Q1). Exploring galaxy properties with a multi-modal foundation model}", journal={Astronomy \& Astrophysics}, year={2025}, publisher={EDP sciences}, DOI= "10.1051/0004-6361/202554611", }

Towards Foundational Models for Dynamical System Reconstruction: Hierarchical Meta-Learning via Mixture of Experts

ICLR 2025 - First Workshop on Scalable Optimization for Efficient and Adaptive Foundation Models

As foundational models reshape scientific discovery, a bottleneck persists in dynamical system reconstruction (DSR): the ability to learn across system hierarchies. Many meta-learning approaches have been applied successfully to single systems, but falter when confronted with sparse, loosely related datasets requiring multiple hierarchies to be learned. Mixture of Experts (MoE) offers a natural paradigm to address these challenges. Despite their potential, we demonstrate that naive MoEs are inadequate for the nuanced demands of hierarchical DSR, largely due to their gradient descent-based gating update mechanism which leads to slow updates and conflicted routing during training. To overcome this limitation, we introduce MixER: Mixture of Expert Reconstructors, a novel sparse top-1 MoE layer employing a custom gating update algorithm based on K-means and least squares. Extensive experiments validate MixER's capabilities, demonstrating efficient training and scalability to systems of up to ten parametric ordinary differential equations. However, our layer underperforms state-of-the-art meta-learners in high-data regimes, particularly when each expert is constrained to process only a fraction of a dataset composed of highly related data points. Further analysis with synthetic and neuroscientific time series suggests that the quality of the contextual representations generated by MixER is closely linked to the presence of hierarchical structure in the data.

@inproceedings{nzoyemmixer, title={Towards Foundational Models for Dynamical System Reconstruction: Hierarchical Meta-Learning via Mixture of Experts}, author={{Desmond Nzoyem}, R. and {Stevens}, G. and {Sahota}, A. and {Barton}, D.A.W. and {Deakin}, T.}, booktitle={First Workshop on Scalable Optimization for Efficient and Adaptive Foundation Models, ICLR 2025} }

2024

Euclid preparation - XLIII. Measuring detailed galaxy morphologies for Euclid with machine learning

Astronomy & Astrophysics

The Euclid mission is expected to image millions of galaxies at high resolution, providing an extensive dataset with which to study galaxy evolution. Because galaxy morphology is both a fundamental parameter and one that is hard to determine for large samples, we investigate the application of deep learning in predicting the detailed morphologies of galaxies in Euclid using Zoobot, a convolutional neural network pretrained with 450 000 galaxies from the Galaxy Zoo project. We adapted Zoobot for use with emulated Euclid images generated based on Hubble Space Telescope COSMOS images and with labels provided by volunteers in the Galaxy Zoo: Hubble project. We experimented with different numbers of galaxies and various magnitude cuts during the training process. We demonstrate that the trained Zoobot model successfully measures detailed galaxy morphology in emulated Euclid images. It effectively predicts whether a galaxy has features and identifies and characterises various features, such as spiral arms, clumps, bars, discs, and central bulges. When compared to volunteer classifications, Zoobot achieves mean vote fraction deviations of less than 12% and an accuracy of above 91% for the confident volunteer classifications across most morphology types. However, the performance varies depending on the specific morphological class. For the global classes, such as disc or smooth galaxies, the mean deviations are less than 10%, with only 1000 training galaxies necessary to reach this performance. On the other hand, for more detailed structures and complex tasks, such as detecting and counting spiral arms or clumps, the deviations are slightly higher, namely around 12% with 60 000 galaxies used for training. In order to enhance the performance on complex morphologies, we anticipate that a larger pool of labelled galaxies is needed, which could be obtained using crowd sourcing. We estimate that, with our model, the detailed morphology of approximately 800 million galaxies of the Euclid Wide Survey could be reliably measured and that approximately 230 million of these galaxies would display features. Finally, our findings imply that the model can be effectively adapted to new morphological labels. We demonstrate this adaptability by applying Zoobot to peculiar galaxies. In summary, our trained Zoobot CNN can readily predict morphological catalogues for Euclid images.

@article{Aussel2024euclid, title={Euclid preparation-XLIII. Measuring detailed galaxy morphologies for Euclid with machine learning}, author={{Aussel}, B. and {Kruk}, S. and {Walmsley}, M. and {Castellano}, M. and {Conselice}, C.J. and {Delli Veneri}, M. and {Dominguez Sanchez}, H. and {Duc}, P.-A. and {Knapen}, J.H. and {Kuchner}, U. and {La Marca}, A. and {Margalef-Bentabol}, B. and {Marleau}, F.R. and {Stevens}, G. and {Toba}, Y. and {Tortora}, C. and {Wang}, L. and the {Euclid Collaboration}}, journal={Astronomy \& Astrophysics}, volume={689}, pages={A274}, year={2024}, publisher={EDP sciences}, DOI= "10.1051/0004-6361/202449609", }

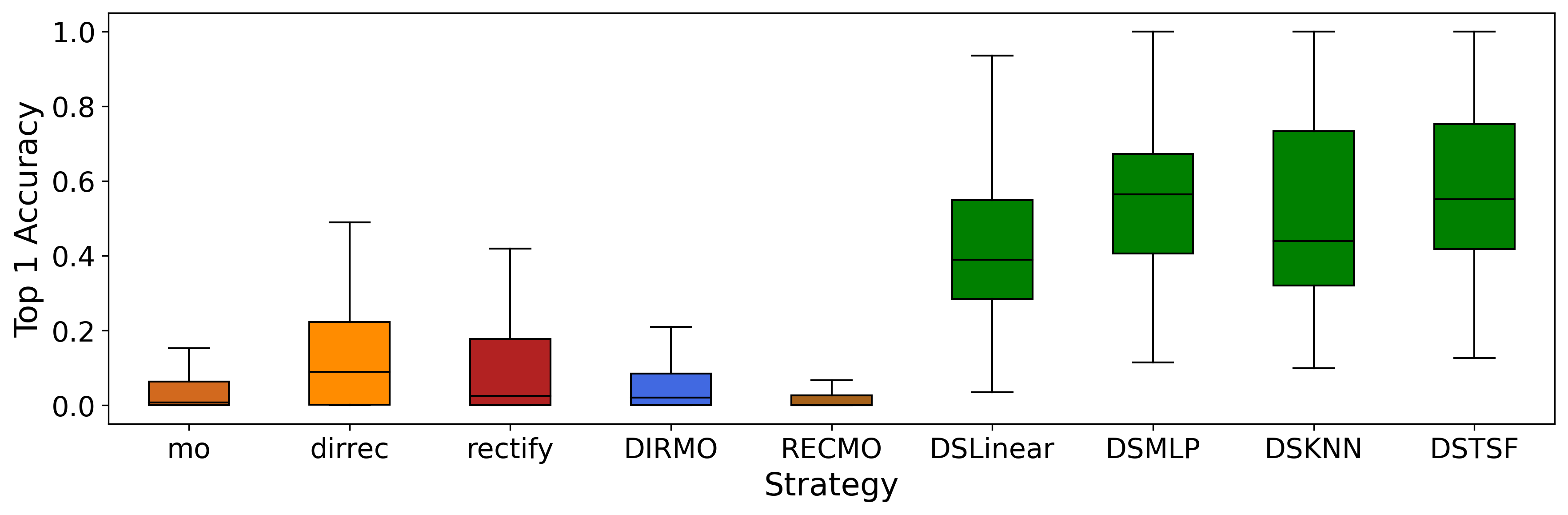

Time-Series Classification for Dynamic Strategies in Multi-Step Forecasting

arXiv Preprint

Multi-step forecasting (MSF) in time-series, the ability to make predictions multiple time steps into the future, is fundamental to almost all temporal domains. To make such forecasts, one must assume the recursive complexity of the temporal dynamics. Such assumptions are referred to as the forecasting strategy used to train a predictive model. Previous work shows that it is not clear which forecasting strategy is optimal a priori, that is, before evaluating on unseen data. Furthermore, current approaches to MSF use a single (fixed) forecasting strategy. In this paper, we characterise the instance-level variance of optimal forecasting strategies and propose Dynamic Strategies (DyStrat) for MSF. We experiment using 10 datasets from different scales, domains, and lengths of multi-step horizons. When using a random-forest-based classifier, DyStrat outperforms the best fixed strategy, which is not knowable a priori, 94% of the time, with an average reduction in mean-squared error of 11%. Our approach typically triples the top-1 accuracy compared to current approaches. Notably, we show DyStrat generalises well for any MSF task.

@misc{green2024timeseries, title={Time-Series Classification for Dynamic Strategies in Multi-Step Forecasting}, author={{Green}, R. and {Stevens}, G. and {Abdallah}, Z. and {de Menezes e Silva Filho}, T.}, year={2024}, eprint={2402.08373} }

2021

AstronomicAL: an interactive dashboard for visualisation, integration and classification of data with Active Learning

Journal of Open Source Software

AstronomicAL is a human-in-the-loop interactive labelling and training dashboard that allows users to create reliable datasets and robust classifiers using active learning. This technique prioritises data that offer high information gain, leading to improved performance using substantially less data. The system allows users to visualise and integrate data from different sources and deal with incorrect or missing labels and imbalanced class sizes. AstronomicAL enables experts to visualise domain-specific plots and key information relating both to broader context and details of a point of interest drawn from a variety of data sources, ensuring reliable labels. In addition, AstronomicAL provides functionality to explore all aspects of the training process, including custom models and query strategies. This makes the software a tool for experimenting with both domain-specific classifications and more general-purpose machine learning strategies. We illustrate using the system with an astronomical dataset due to the field’s immediate need; however, AstronomicAL has been designed for datasets from any discipline. Finally, by exporting a simple configuration file, entire layouts, models, and assigned labels can be shared with the community. This allows for complete transparency and ensures that the process of reproducing results is effortless.

@article{Stevens_2021, doi = {10.21105/joss.03635}, year = 2021, month = {sep}, publisher = {The Open Journal}, volume = {6}, number = {65}, pages = {3635}, author = {{Stevens}, G. and {Fotopoulou}, S. and {Bremer}, M.~N. and {Ray}, O.}, title = {{AstronomicAL}: an interactive dashboard for visualisation, integration and classification of data with Active Learning}, journal = {Journal of Open Source Software} }
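As a rough illustration of what a "query strategy" means in active learning, the sketch below ranks unlabelled points by the entropy of a classifier's predicted class probabilities and returns the most uncertain ones for labelling. It is a generic uncertainty-sampling example, not AstronomicAL's API: the function name `entropy_query` and the example probabilities are assumptions made here.

```python
import numpy as np

def entropy_query(probabilities, batch_size=1):
    """Return indices of the points whose predicted class probabilities
    have the highest Shannon entropy (i.e. the most uncertain predictions)."""
    p = np.clip(np.asarray(probabilities, dtype=float), 1e-12, 1.0)
    entropy = -np.sum(p * np.log(p), axis=1)
    # Sort ascending, reverse to descending, keep the top `batch_size`.
    return np.argsort(entropy)[::-1][:batch_size]

# Hypothetical predicted probabilities for three unlabelled sources.
probs = [[0.99, 0.01], [0.50, 0.50], [0.80, 0.20]]
print(entropy_query(probs, batch_size=2))
```

In a labelling loop, the returned indices would be shown to the expert, the new labels added to the training set, and the classifier refitted before the next query.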